Regulating Deep-Fake Porn: A Literary Approach

- Victoria Matthews

- May 16, 2021


Victoria, an English and Classics student at Exeter College, Oxford, explores what representations in literature can tell us about the ethics of regulating hyper-realistic simulations of sexual offences.

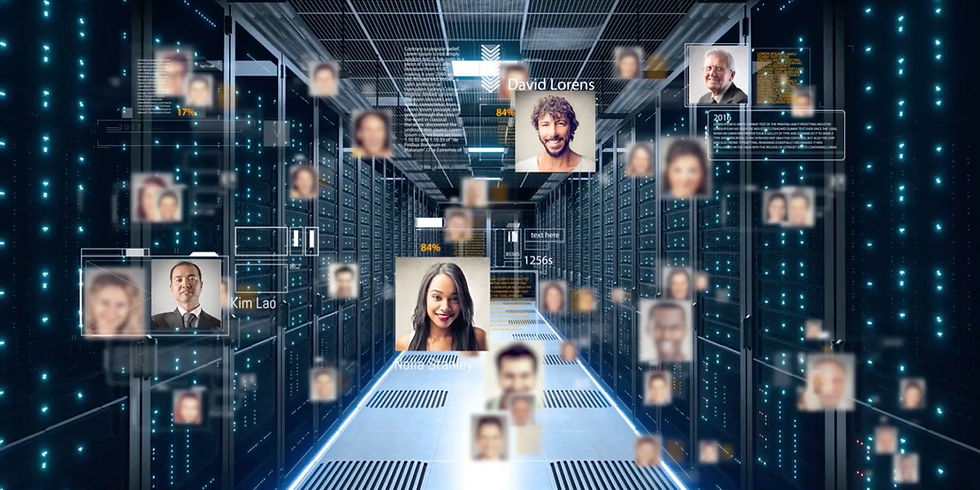

AI-generated media, such as Deepfake technology, offer myriad possibilities for the improvement of society. Deepfakes have been used, for instance, by Microsoft to create Seeing AI, an application that provides real-time audio description to the visually impaired. However, this technology has also garnered significant attention for its malicious deployment in controversies ranging from celebrity pornography scandals to fake news. The hyper-realistic simulation of sex by Deepfake pornography has exposed misuses of the technology that demand particularly urgent consideration by ethicists and regulatory authorities.

As a Classics and English student, I often find myself looking to the world of literature when reflecting upon the ethical and legal concerns with Deepfake pornography. The literary representation of real-life figures without their consent raises moral issues that have been much discussed. As a society, we must extend and adapt such debates to account for the problems posed by 21st-century media like Deepfakes. When we compare how literary and digital media represent sexual content, more questions than answers seem to emerge. Why, for example, do many readers feel morally comfortable with the literary representation of sexual fantasies, as in erotic fiction, yet vehemently disapprove when a sexual fantasy is reproduced digitally? Is this discrepancy in our instinctive moral assessments justified by the fact that the latter scenario is able to reproduce an individual in such a way as could be misperceived as real? Should both cases, in fact, be acceptable - but only for private, rather than public, consumption? We need to keep asking these questions, even if they make us uncomfortable. These questions demonstrate that, while STEM and humanities subjects are often considered to inhabit disparate worlds, they share concerns that are centrally relevant to the issue of regulating sexual offences as portrayed in AI-generated synthetic media.

When an author writes about a real-life individual, they necessarily perform violence to the reality of the person represented. Regardless of how “realistically” a novel or poem might describe the individual, this description will always be figured subjectively; so, even a literary character that is virtually indistinguishable from the person they represent will remain unlike their real-life counterpart simply by having been formulated in writing. Another feature of certain literary narratives, familiar to any avid reader, is that they offer a simulative and immersive experience, allowing us to feel “what it’s like” to know a character. This feeling, in turn, holds significant power to move us into reflection, belief or even action.


The ethical implications that emerge when an individual is represented artistically without their consent, as, for example, in a novel, are of similar moral and legal concern to those that emerge when a non-consenting individual is rendered digitally. The balance between the author’s freedom to represent a subject of their choice and the necessity that they do so accurately and fairly marks a well-traversed legal area. The subject portrayed by this author must be protected against defamation and harassment, engaging concerns for their consent and privacy, but the author is simultaneously liberated through rights like those encapsulated in the U.S. First Amendment. The ethics of representing subjects who are vulnerable to misrepresentation have been treated thoroughly in literary studies, notably by Thomas Couser. However, as Deepfake technology provides more sophisticated ways for users to hyper-realistically depict individuals and to share these depictions at the click of a button, it is imperative that these ethics are given similar attention by science policy-makers.

Such regulatory urgency is particularly critical since the development of Deepfakes continues to facilitate the sexual harassment of non-consenting victims. A staggering 96% of all Deepfakes can be characterised as non-consensual pornography. Using AI and Deep Learning algorithms to superimpose victims’ faces onto pornography, this hyper-realistic form of video doctoring is now accessible to the average user as a result of programs like FaceApp. Available to the masses in this way, Deepfake technology has essentially made it possible for any user to reify their fantasy about another person, no matter how disturbing, into a convincing and shareable pornographic video.

Last year, I came to interview an academic for my tech ethics podcast, Moral I.T., who shared my concerns about the ethical minefield that is the Deepfake industry. Since Deepfake pornography is disproportionately wielded against women, Dr Carl Öhman stressed that any ethical evaluation of the harm it causes must be situated in the macro-context of how it perpetuates sexism. Approaches to the ethical issues with this phenomenon often also isolate the problem of authenticity, namely, that this technology problematises the average person’s ability to distinguish between a real recording and a Deepfake video. The crux of the authenticity problem is that a viewer of Deepfake pornography could reasonably believe that the victim who has been unwittingly doctored into the video actually engaged in the sexual acts depicted, a conviction that harms the victim’s reputation.

Policy-makers have demonstrated that they are not ignorant of the danger posed by Deepfake technology and its ability to create hyper-realistic simulations of acts categorised as sexual offences in real life. U.S. federal law has already established ways to protect against the harm caused by virtual sexual offences that verge on being indistinguishable from reality, in particular, in its regulation of child pornography. This regulation criminalises any visual depiction of a child engaging in a sexual act that is ‘virtually indistinguishable such that an ordinary person viewing the depiction would conclude that [it] is of an actual minor engaged in sexually explicit conduct.’ The terms of this definition are adroitly framed so as to mitigate the same authenticity problem as that which so troubles ethicists concerning Deepfakes.

More specifically, strides are being taken towards regulating Deepfakes, including the DEEP FAKES Accountability Act in the U.S., currently a Bill under consideration by Congress. This Act would criminalise any Deepfake created without ‘an embedded digital watermark clearly identifying such record as containing altered audio or visual elements.’ The purpose of such a watermark is to ensure that, even if an online user were to encounter a hyper-realistic Deepfake pornography video, they would be immediately alerted to the fact that this was a doctored video in which the pictured individual had not actually partaken in the sexual acts depicted.

However, the Accountability Act as it is proposed should trouble ethicists and policy-makers alike. For it is highly likely that as soon as the proposed Accountability Act has been approved, the protection it offers will be rendered defunct. This regulation will surely need to be updated in line with the evolving sophistication of the very technology that it concerns. One such evolution that must be anticipated is the inevitable integration of AI-generated synthetic media like Deepfakes with even more immersive technologies, such as Virtual Reality (VR) and Augmented Reality (AR).

A marriage between Deepfake technology and VR or AR has the potential to allow whole ‘synthetic realities’ to become commercially viable. Synthetic Reality (SR), if widely deployed in this way, could entirely eradicate our ability to distinguish between ‘real’ and ‘virtual’ representations of individuals, compounding the issue of authenticity to an extreme degree. Rapid regulatory action must be taken to prevent SR-generated pornographic Deepfakes from becoming widespread, even gamified. For when this occurs, the debate will be placed in sharper focus as to whether playing highly realistic, gamified synthetic realities, more than simply making users feel that the illicit sexual acts they experience are real, could even provoke them into enacting real-world sexual offences.


The current Accountability Act also exempts from penalty any Deepfakes ‘appearing in a context such that a reasonable person would not mistake the falsified material activity for actual material activity of the exhibited living person, such as…fictionalized radio, television, or motion picture programming.’ This exemption somewhat optimistically demarcates a definitive distinction between ‘actual’ and malicious deployments of Deepfakes and ‘fictionalized,’ seemingly harmless ones. Yet in this dichotomy, how would one categorise a gamified rendering of a victim engaging in a sexual act to which they had not consented, made indistinguishable from reality by SR-generated Deepfakes? In this case, checking one box to describe this phenomenon, either marking it as an ‘actual material activity’ or a clearly ‘fictionalized’ scenario, is far from simple.

Since this hypothetical, hyper-realistic pornographic game would be playable, the terms of the Act suggest that it would be branded a ‘fictionalized’ context, considered akin to a highly realistic but fictional novel and thereby protected against any accountability. Yet the degree of immersion that this hypothetical gamer would experience through the fusion of Deepfake technology with virtual or augmented reality would be significantly superior to that offered by any novel, no matter how gripping a piece of literature it might be. As a result, even the most ‘reasonable person’ might struggle to delineate the game as either fabricated or actual.

The level of realism offered by technologies that are able to digitally and sexually reproduce individuals without their consent is only set to increase. With the recent news that Facebook are researching how augmented reality could become powered by users’ thoughts, it is clear that the distinction between natural reality and synthetic reality, between Deepfake pornography that is distinguishable and ‘virtually indistinguishable’ from real life, will continue to be eroded. Literary theory may prove a powerful tool in aiding policy-makers to anticipate the calamitous possible effects of virtually simulating sexually immoral behaviour using technology. However, it is vital that we as a society preemptively ensure our regulation sufficiently protects victims of non-consensual illicit cyber-attacks whose ramifications extend far beyond the scope of literature.
